Introduction

Text detection is a major problem in optical character recognition (OCR), and researchers have proposed various solutions to it. EAST (Efficient and Accurate Scene Text Detector) is one such approach, designed for scene text detection: finding text in images of natural scenes, such as street signs or billboards, where the text sits on an arbitrary background. It was later found that the same model is also very useful for detecting text in scanned images.

EAST Model

EAST is a deep learning-based algorithm that detects text with a single neural network, eliminating multi-stage approaches. Its key component is a neural network trained to directly predict the existence of text instances and their geometries from full images. Because the model is a fully convolutional network adapted for text detection, it outputs dense per-pixel predictions of words or text lines. This removes the intermediate steps used in traditional pipelines, such as candidate proposal, text region formation and word partition.

The key highlights of EAST (Efficient and Accurate Scene Text Detector) are as follows:

• The authors propose a scene text detection method with two stages: a Fully Convolutional Network (FCN) and an NMS merging stage. The FCN directly produces text regions, skipping redundant and time-consuming intermediate steps.

• The pipeline is flexible enough to produce either word-level or line-level predictions, whose geometric shapes can be rotated boxes or quadrangles, depending on the application.

• According to the paper's experiments, the proposed algorithm significantly outperformed the state-of-the-art methods of its time in both accuracy and speed.

EAST Methodology

Since the network predicts text instances and their geometries directly from the full image, the only post-processing steps are thresholding and non-maximum suppression (NMS) on the predicted geometric shapes.
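The thresholding step can be sketched as below. This is a minimal illustration, not the repository's code: the function name `candidate_pixels`, the 0.8 cut-off and the assumption that the output maps are at 1/4 of the input resolution (hence `stride=4`) are ours. It only keeps pixels whose text score is high enough and maps them back to input-image coordinates; the geometry decoding and NMS come afterwards.

```python
import numpy as np

def candidate_pixels(score_map, score_thresh=0.8, stride=4):
    """Threshold an EAST-style score map and return candidate text pixels.

    score_map:    2-D array of per-pixel text probabilities.
    score_thresh: assumed cut-off; pixels at or below it are discarded.
    stride:       input pixels per output pixel (4 if the maps are at 1/4 resolution).
    Returns a list of (x, y, score) with x, y in input-image coordinates.
    """
    # np.nonzero returns indices in row-major order, i.e. the map is scanned
    # row by row, which is the order locality-aware NMS relies on later.
    ys, xs = np.nonzero(score_map > score_thresh)
    return [(int(x) * stride, int(y) * stride, float(score_map[y, x]))
            for x, y in zip(xs, ys)]
```

For example, `candidate_pixels(np.array([[0.1, 0.9], [0.95, 0.2]]))` keeps two pixels, one from each row.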

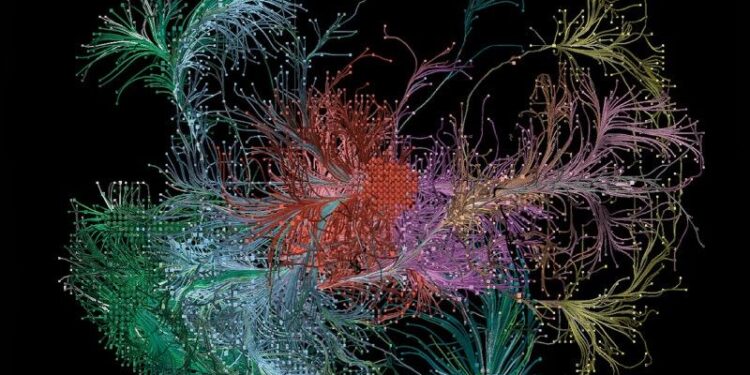

As the EAST architecture diagram above shows, the network has three branches that combine into a single neural network.

These branches are:

Feature Extractor Stem – It extracts features from different layers of the network. The stem can be a convolutional network pre-trained on the ImageNet dataset, with interleaved convolution and pooling layers. As the diagram shows, four levels of feature maps, denoted f1, f2, f3 and f4, are extracted from the stem; their sizes are 1/32, 1/16, 1/8 and 1/4 of the input image, respectively.

Feature Merging Branch – This branch merges the feature maps taken from different layers of the stem (for example VGG16). The input image is passed through the stem, the outputs of four of its layers are taken, and these feature maps are merged step by step in a U-Net-like fashion. h1, h2, h3 and h4 are the resulting merged feature maps.

Output Layer – The output layer consists of a score map and a geometry map. The score map gives, for each pixel, the probability that it belongs to a text region, while the geometry map defines the boundary of the text box. The geometry can be either an RBOX (rotated box) or a QUAD (quadrangle). For RBOX, each pixel predicts four distances, from the pixel to the top, right, bottom and left edges of the box, plus the rotation angle of the box. For QUAD, each pixel predicts eight values: the coordinate offsets from the pixel to the four vertices of the quadrangle.
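To make the RBOX geometry concrete, here is a sketch that turns one pixel's prediction into four corner points. The function name `rbox_to_corners` and the rotation convention (a local offset (dx, dy) maps to (dx·cos θ + dy·sin θ, −dx·sin θ + dy·cos θ), with y pointing down) are assumptions for illustration; check the sign convention against the code you actually use. The pixel position must already be in input-image coordinates, i.e. scaled up from the 1/4-resolution output.

```python
import math

def rbox_to_corners(px, py, top, right, bottom, left, angle):
    """Decode one RBOX prediction at pixel (px, py) into four corners.

    top/right/bottom/left: distances from the pixel to the box edges,
    measured in the box's own (rotated) frame. angle: rotation in radians.
    Assumed convention: local offset (dx, dy) maps to image coordinates as
    (dx*cos + dy*sin, -dx*sin + dy*cos), with the y axis pointing down.
    Returns corners in order: top-left, top-right, bottom-right, bottom-left.
    """
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Corner offsets relative to the pixel before rotation.
    local = [(-left, -top), (right, -top), (right, bottom), (-left, bottom)]
    corners = []
    for dx, dy in local:
        # Rotate the offset, then translate by the pixel position.
        x = px + dx * cos_a + dy * sin_a
        y = py - dx * sin_a + dy * cos_a
        corners.append((x, y))
    return corners

if __name__ == "__main__":
    # Axis-aligned case: a 40 x 10 box around pixel (10, 20).
    print(rbox_to_corners(10, 20, top=5, right=30, bottom=5, left=10, angle=0.0))
    # prints [(0.0, 15.0), (40.0, 15.0), (40.0, 25.0), (0.0, 25.0)]
```

Because the offsets are rotated rather than the distances themselves, the box keeps its width (left + right) and height (top + bottom) for any angle.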

EAST Implementation

This implementation has the following features:

- Only RBOX part is implemented

- A fast Locality-Aware NMS in C++ provided by the paper’s author

- The pre-trained model provided achieves 80.83 F1-score on ICDAR 2015 Incidental Scene Text Detection Challenge using only training images from ICDAR 2015 and 2013
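The idea behind locality-aware NMS can be sketched in pure Python. This is a simplified illustration, not the repository's C++ code: it uses axis-aligned boxes `(x1, y1, x2, y2, score)` instead of quadrangles, and the 0.3 IoU threshold is an assumed value. Boxes arrive in row-major scan order; each box that overlaps the previous (merged) box is merged into it by score-weighted averaging of coordinates, with the scores summed, and a standard NMS pass then cleans up the much shorter merged list.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2, score)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def weighted_merge(a, b):
    """Average coordinates weighted by score; the merged score is the sum."""
    sa, sb = a[4], b[4]
    coords = tuple((sa * ca + sb * cb) / (sa + sb) for ca, cb in zip(a[:4], b[:4]))
    return coords + (sa + sb,)

def standard_nms(boxes, thresh):
    """Greedy NMS: keep the highest-scoring boxes, drop heavy overlaps."""
    keep = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(iou(box, k) <= thresh for k in keep):
            keep.append(box)
    return keep

def locality_aware_nms(boxes, thresh=0.3):
    """boxes must arrive in row-major scan order, one per candidate pixel."""
    merged, last = [], None
    for box in boxes:
        if last is not None and iou(last, box) > thresh:
            # Neighbouring pixels predicting the same text: merge them.
            last = weighted_merge(last, box)
        else:
            if last is not None:
                merged.append(last)
            last = box
    if last is not None:
        merged.append(last)
    return standard_nms(merged, thresh)
```

With `[(0, 0, 10, 10, 0.9), (1, 0, 11, 10, 0.8), (50, 50, 60, 60, 0.7)]`, the first two boxes merge into one box with score 1.7 and the third survives on its own. Merging neighbours first is what keeps this fast: plain NMS over every text pixel would compare far more pairs.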

There are a few differences between this implementation and the paper:

- Use dice loss (optimize IoU of segmentation) rather than balanced cross entropy

- Use ResNet-50 rather than PVANet

- Use linear learning rate decay rather than staged learning rate decay
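The dice loss mentioned above can be sketched with NumPy. This is an illustrative version, not the repository's TensorFlow code: the function name, the optional `training_mask` (a 0/1 array marking which pixels should count, our assumption about how ignored regions would be excluded) and the small `eps` guard are ours. The loss is one minus the Dice coefficient between the ground-truth and predicted score maps, so it directly rewards overlap (IoU-like agreement) instead of per-pixel classification.

```python
import numpy as np

def dice_loss(score_true, score_pred, training_mask=None, eps=1e-5):
    """1 - Dice coefficient between ground-truth and predicted score maps.

    score_true:    ground-truth map (1 = text pixel, 0 = background).
    score_pred:    predicted per-pixel text probabilities, same shape.
    training_mask: optional 0/1 array of pixels to include (assumption).
    """
    if training_mask is None:
        training_mask = np.ones_like(score_true, dtype=float)
    intersection = np.sum(score_true * score_pred * training_mask)
    union = (np.sum(score_true * training_mask)
             + np.sum(score_pred * training_mask) + eps)  # eps avoids 0/0
    return 1.0 - 2.0 * intersection / union
```

A perfect prediction gives a loss close to 0, and a prediction with no overlap gives exactly 1.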

The steps to set up the EAST implementation are as follows:

Step 1. Clone the repository with the following command in an Ubuntu environment:

git clone https://github.com/indiantechwarrior/EAST

Step 2. Change into the repository directory and install the requirements:

cd EAST

pip install -r requirements.txt (or pip3 install -r requirements.txt)

Make sure tensorflow==1.15.0 is installed. The command for this is:

pip install tensorflow==1.15.0 (or pip3 install tensorflow==1.15.0)

Running EAST with pretrained model

Step 1. Download pre-trained model

The pre-trained model can be downloaded from Google Drive:

https://drive.google.com/file/d/0B3APw5BZJ67ETHNPaU9xUkVoV0U/view

Step 2. Create a tmp folder, unzip the pre-trained model into it, and create 'images' and 'output' folders inside tmp. Put the images you want to test in tmp/images.
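If you prefer to script this step, a small Python sketch is shown below. The function name `prepare_tmp` is hypothetical, and so is the archive name in the usage line: use whatever file you actually downloaded from Google Drive.

```python
import zipfile
from pathlib import Path

def prepare_tmp(repo_root, model_zip=None):
    """Create tmp/, tmp/images/ and tmp/output/ under repo_root.

    model_zip: optional path to the downloaded pre-trained model archive;
    if given, it is extracted into tmp/.
    """
    tmp = Path(repo_root) / "tmp"
    for sub in ("images", "output"):
        # parents=True also creates tmp/ itself; exist_ok makes reruns safe.
        (tmp / sub).mkdir(parents=True, exist_ok=True)
    if model_zip is not None:
        with zipfile.ZipFile(model_zip) as zf:
            zf.extractall(tmp)
    return tmp
```

Usage (hypothetical archive path): `prepare_tmp("/content/EAST", "/content/east_icdar2015_resnet_v1_50_rbox.zip")`. After that, copy your test images into tmp/images.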

Step 3. Run the evaluation script:

python eval.py --test_data_path=/content/EAST/tmp/images/ --gpu_list=0 --checkpoint_path=/content/EAST/tmp/east_icdar2015_resnet_v1_50_rbox/ --output_dir=/content/EAST/tmp/output/

Step 4. The results will be saved in tmp/output.
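To use the detections in further code, you can load the text files from tmp/output. The sketch below assumes that eval.py writes one .txt file per image, with one box per line given as eight comma-separated numbers (x1,y1,x2,y2,x3,y3,x4,y4). That layout is our assumption, so open one of your output files and adjust the parser if it differs. The names `read_boxes` and `read_all` are hypothetical.

```python
from pathlib import Path

def read_boxes(txt_path):
    """Parse one result file into a list of quadrilaterals [(x, y)] * 4."""
    boxes = []
    for line in Path(txt_path).read_text().splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        values = [float(v) for v in line.split(",")[:8]]
        # Pair up (x1, y1), (x2, y2), ... into four corner points.
        boxes.append(list(zip(values[0::2], values[1::2])))
    return boxes

def read_all(output_dir):
    """Map each result file's stem (usually the image name) to its boxes."""
    return {p.stem: read_boxes(p) for p in sorted(Path(output_dir).glob("*.txt"))}
```

Usage: `detections = read_all("/content/EAST/tmp/output")`.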

Training own EAST model

Download

- Models trained on ICDAR 2013 (training set) + ICDAR 2015 (training set): GoogleDrive

- Resnet V1 50 provided by tensorflow slim: slim resnet v1 50

Train

To train the model, provide the dataset path. The dataset directory must contain a separate ground-truth (gt) text file for each image. Then run:

python multigpu_train.py --gpu_list=0 --input_size=512 --batch_size_per_gpu=8 --checkpoint_path=/content/EAST/tmp/east_icdar2015_resnet_v1_50_rbox/ --text_scale=512 --training_data_path=/content/EAST/data/sroie_train/ --geometry=RBOX --learning_rate=0.0001 --num_readers=4 --pretrained_model_path=/content/EAST/data/resnet_v1_50.ckpt

If you have more than one GPU, pass the GPU ids to gpu_list (for example --gpu_list=0,1,2,3).
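When switching between one and several GPUs, it helps to remember that batch_size_per_gpu is, as its name suggests, a per-GPU value, so the effective batch grows with the number of GPUs. The hypothetical helper below builds the command line from a list of GPU ids plus any of the flags shown above and reports that effective batch size. It only assembles a string; it does not run anything.

```python
import shlex

def train_command(gpu_ids, batch_size_per_gpu=8, **flags):
    """Build a multigpu_train.py command line and the effective batch size.

    gpu_ids: GPU indices, e.g. [0, 1, 2, 3].
    flags:   any other flags from the command above, e.g. input_size=512.
    """
    args = ["python", "multigpu_train.py",
            "--gpu_list=" + ",".join(str(g) for g in gpu_ids),
            "--batch_size_per_gpu=%d" % batch_size_per_gpu]
    args += ["--%s=%s" % (name, value) for name, value in flags.items()]
    # Each GPU processes batch_size_per_gpu images per step.
    total_batch = batch_size_per_gpu * len(gpu_ids)
    # shlex.quote protects paths that contain spaces or shell characters.
    return " ".join(shlex.quote(a) for a in args), total_batch
```

For example, `train_command([0, 1, 2, 3], input_size=512, geometry="RBOX")` returns a command with `--gpu_list=0,1,2,3` and an effective batch size of 32.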

Note: rename the ICDAR 2015 gt text files from gt_img_*.txt to img_*.txt (or change the code in icdar.py), and remove some extra characters from the files. See the examples in training_samples/.
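The renaming can be scripted. The sketch below copies every gt_img_*.txt file to img_*.txt in a separate folder, so the originals stay untouched. It assumes the extra characters are a UTF-8 byte-order mark at the start of each file, which reading with the "utf-8-sig" codec strips. Compare the result with the files in training_samples/ in case other characters also need removing. The function name is hypothetical.

```python
from pathlib import Path

def convert_icdar2015_gt(gt_dir, out_dir):
    """Copy gt_img_*.txt files to out_dir as img_*.txt without a leading BOM."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    converted = []
    for src in sorted(Path(gt_dir).glob("gt_img_*.txt")):
        # "utf-8-sig" drops a UTF-8 byte-order mark if one is present.
        text = src.read_text(encoding="utf-8-sig")
        dst = out / src.name[len("gt_"):]  # gt_img_1.txt -> img_1.txt
        dst.write_text(text, encoding="utf-8")
        converted.append(dst.name)
    return converted
```

Point `out_dir` at the folder you pass as `--training_data_path`, alongside the matching img_*.jpg files.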

The command above uses reduced values of num_readers and batch_size_per_gpu and continues training from the pre-trained checkpoint. Before running it, update the current checkpoint path in the 'checkpoint' file inside the east_icdar2015_resnet_v1_50_rbox folder.
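TensorFlow's 'checkpoint' file is a small text file whose model_checkpoint_path line names the checkpoint to restore. The sketch below rewrites it to point at a given checkpoint prefix. The function name and the example prefix model.ckpt-123 are hypothetical; use the prefix of the .index/.data files actually present in your folder. If TensorFlow 1.x is importable, tf.train.update_checkpoint_state does the same job.

```python
from pathlib import Path

def point_checkpoint_to(ckpt_dir, ckpt_prefix):
    """Rewrite ckpt_dir/checkpoint so TensorFlow restores ckpt_prefix.

    ckpt_prefix: checkpoint name without the .index/.data suffix,
    e.g. "model.ckpt-123" (hypothetical). Relative names are resolved
    against ckpt_dir.
    """
    state = Path(ckpt_dir) / "checkpoint"
    state.write_text(
        'model_checkpoint_path: "%s"\n'
        'all_model_checkpoint_paths: "%s"\n' % (ckpt_prefix, ckpt_prefix)
    )
    return state
```

The same helper is handy later, when training produces new checkpoints and the 'checkpoint' file needs to name the latest one.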

Step 1. Download the pre-trained VGG16 model and save it as data/vgg_16.ckpt. You can download it from tensorflow/models.

Step 2. Download the prepared dataset from Google Drive, put it in data/dataset/mlt, and then start training.

Step 3. Alternatively, you can prepare your own dataset by following the steps below:

Step 4. Set DATA_FOLDER and OUTPUT in utils/prepare/split_label.py to match your dataset, then run split_label.py from the repository root:

python ./utils/prepare/split_label.py

It will generate the prepared data in data/dataset/.

Step 5. An example input file for split_label.py is gt_img_859.txt, and the corresponding output file is img_859.txt.

Step 6. Update the DATA_FOLDER path in utils/dataset/data_provider.py.

Step 7. To train on your dataset starting from the pre-trained checkpoints, increase max_steps (60000) in main/train.py to a higher number; adding 10000 is normally a good starting point.

Step 8. Execute

python ./main/train.py

Step 9. When training completes, you will find updated checkpoints that can then be used for testing or further training. To use the new checkpoints, remember to update the filename in the 'checkpoint' file (the same file that came with the downloaded pre-trained checkpoints).

You can now run python ./main/demo.py to validate the results with the updated checkpoints.